(2025-07-14) How to NOT evaluate the power of a quantum computer, and a rant about Ludd grandpas

I had a hard time finding a fitting title for this post, but I felt I had to write something. TL;DR: this is your daily reminder that "How large is the biggest number it can factorize" is NOT a good measure of progress in quantum computing. If you're still stuck in this mindset, you're in for a rude awakening. It is also a rant about seasoned but misinformed "experts" who are given, in my opinion, too much credit on topics they don't really understand, with unhealthy consequences.

This morning, I saw this new preprint on the IACR Eprint:

Replication of Quantum Factorisation Records with an 8-bit Home Computer, an Abacus, and a Dog

When I see "catchy" titles like this on the Eprint, my day is typically ruined. I'm immediately on the defensive even without reading the content:

- I know it's either a joke or 99% BS.

- But I also know that most people will not catch it, and will start asking me "hey, have you seen this new crypto paper???".

- Regardless, I know there's going to be a contentious tone implied, and many nasty online arguments in the making.

Sadly, the TikTokization of academic papers made these kinds of titles more and more common: there is so much stuff produced, that for your paper to reach an audience nowadays it has to stand out from the crowd somehow. I think the first title of this kind I saw was the "Faster, Longer, Harder..." thingy, but at least that one had technical merit. I'm sure there are many other "social media fight"-like titles I thankfully didn't see.

Anyway, back to the paper in question from today. This is a special case, because it's one of those papers which not only have a provocative title, but are meant to be provocative in tone and content. The authors are Peter Gutmann and Stephan Neuhaus. Regrettably, I don't know Neuhaus, despite him being employed at ZHAW in Zurich; maybe one day I will meet him at some event or for a beer. But I know Gutmann by fame: one of the old "cypherpunk legends" from a past time, front fighter of the 90's Crypto Wars, author of the Gutmann Method to securely erase files from hard drives, and long-time quantum skeptic. Among his works: "On the Heffalump Threat" (a 1-page allegory seeing quantum cryptanalysis as a myth) and "Why Quantum Cryptanalysis is Bollocks", which is more of a slide deck, and on which, from what I can see, most of the new paper is based. Trying to distill the main claims from this work, they boil down to:

- Most papers claiming quantum factorization records are BS.

- At the end of the day, the best that has been done so far is factorizing 15 as 3x5.

- Therefore, quantum cryptanalysis is BS.

Let me start by saying that I agree with the first point above. Most papers of the form "New Quantum Factorization Record Using Shor's Algorithm" are, let's say, not particularly insightful, and are probably only written to add another publication record. But most of them are also not particularly wrong, just overselling a minor result. Honestly, there is much worse in academia nowadays.

But the underlying issue which annoys me is the repeated, flawed logic I always see brought up by many quantum skeptics. The original "factorize 15" paper was a proof of concept from 2001 (!!!) and it was the first time someone demonstrated a toy-example of quantum factorization. I don't think reputable QC companies have been advertising their progress in terms of "factorization record" in the last decade or so, and we do not care for the many non-reputable ones. But too many people still look at that paper from 2001, say "no larger number has been factorized quantumly so far", and then extrapolate "QC is a scam" from that.

When I ranted about it on Mastodon and LinkedIn today, I realized how flawed my assumption was that most crypto/infosec professionals would immediately understand what I was talking about. I received many messages, publicly and privately, asking me "but then WHAT is a good measure for QC progress?", so I guess it's a good idea to explain here as well why you should disregard quantum factorization records.

The thing is: for cryptanalytic quantum algorithms (Shor, Grover, etc.) you need logical/noiseless qubits, because otherwise your computation is constrained to very small (toylike) circuit depth/time. That's because your "operating window" of time for completing your computation is on the order of nanoseconds; after that, the quantum error accumulates so much that your results become garbage. With these constraints, you can only factorize numbers like 15, even if your QC becomes 1000x "better" under every other objective metric.

So, we are in a situation where even if QC gets steadily better over time, you won't see any of these improvements if you only look at the "factorization record" metric: nothing will happen until you hit a cliff (e.g., logical qubits become available), and then suddenly scaling up factorization power becomes easier. It's a typical example of non-linear progress in technology (a bit like what happened with LLMs in the last few years) and the risk is that everyone will be caught by surprise. Unfortunately, this paradigm is very different from the traditional, "old-style" cryptanalysis handbook, where people used to size keys according to how fast CPU power had been progressing in the last X years. It's a deep-rooted mindset which is very difficult to change, especially among older-generation cryptography/cybersecurity experts.

A better measure of progress (valid for cryptanalysis, which is, anyway, a very minor aspect of why QCs are interesting IMHO) would be: how far are we from fully error-corrected and interconnected qubits? I don't know the answer, or at least I don't want to give estimates here. But I know that in the last 10 or more years, all objective indicators of progress that point to that cliff have been steadily improving: qubit fidelity, error rate, coherence time, interconnections... At this point I don't think it's wise to keep trashing the field of quantum security as "academic paper churning". That said, I need to stress that I am myself no "quantum doomsayer", and I don't think, or at least have no reason to believe, that "Q-Day is around the corner" (sigh, this sounds so wrong).
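To make the "cliff" a bit more concrete, here is a toy sketch, not a model of any real machine. It assumes the commonly quoted rule of thumb for surface-code error correction, where the logical error rate scales like A * (p / p_th) ^ ((d + 1) / 2) for physical error rate p, threshold p_th and code distance d. The threshold (1e-2), the prefactor (0.1), the distance (25) and the function names are all illustrative assumptions of mine, and "usable depth" is just the crude estimate 1 / error rate.

```python
def logical_error_rate(p_phys, distance, p_threshold=1e-2, prefactor=0.1):
    """Heuristic surface-code scaling (assumed constants, illustration only)."""
    rate = prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)
    # Above threshold the formula exceeds 1, so clamp it to a valid probability.
    return min(1.0, rate)


def usable_depth(error_rate):
    """Crude estimate of how many operations fit before an error is expected."""
    return 1.0 / error_rate


if __name__ == "__main__":
    # Sweep the physical error rate from above the assumed threshold to below it.
    for p_phys in (2e-2, 1e-2, 5e-3, 1e-3):
        raw = usable_depth(p_phys)
        corrected = usable_depth(logical_error_rate(p_phys, distance=25))
        print(f"p={p_phys:.0e}  raw depth ~{raw:.3g}  error-corrected depth ~{corrected:.3g}")
```

In this toy model, improving the physical error rate by 20x only buys 20x more raw depth, which is still toy-circuit territory. The error-corrected depth, on the other hand, stays useless above the assumed threshold and then explodes by many orders of magnitude once you are below it. A metric that only looks at what the raw hardware can factorize will see almost nothing until that point.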

The other point raised by Gutmann and others is that, in the field of cybersecurity, we have so much stuff to worry about that it is pointless to focus on a theoretical menace that might or might not materialize in the next 20 years. This is a typical example of the relative privation fallacy, and it's even worse because it fails to consider that the nature of this "theoretical menace" is so much more disruptive at scale than anything else you could consider today: imagine a world where pretty much all cryptographic signatures, certificates, and asymmetric encryption fail. Can you imagine that world? I'm not sure I can.

And now here is my meta-rant. Just to be clear: I don't mean to apply it to Gutmann in particular. Sure, I disagree with his views, but there are far worse offenders out there.

I wish people would stop giving absolute credit to "prominent experts" just because they released some book/paper/software of great fame but unproven impact 30 years ago and have since then retreated into golden tenure, writing technically empty but catchy papers with provocative titles, typically mocking or dismissing any form of new technology they don't fully understand. Quantum, Web3, AI, green energy, whatever, there is always a reason to distrust. These "Ludd grandpas" (you know at least a couple of names of who I'm referring to) are, unfortunately, often worshipped by a large number of semireligious followers, who contribute to the spread of their short-sightedness. It's the strength of numbers found in such religious cohorts that empowers these celebrities to make bold claims that would embarrass anyone else, without fear of repercussion. Because, if an early-career researcher makes similar bold claims and they're proven wrong, then they are ruined for life (or they will be perceived as arrogant "a priori" and be ruined even before having the chance to prove their assertions). But if it's some old and famous expert who went on TV, then whatever, they are not in a position to suffer consequences from their mistakes. They can safely entrench themselves by challenging any opposing but weaker voice to prove them wrong. I find there is such an unfair advantage in saying "I will be right until proven wrong", because in many cases it also implies "and, if one day I'm proven wrong, nobody will care, because I will be tenured/retired and people have the attention span of a hamster anyway". In the meantime, the rants of such VIPs glowing with fossil glory hurt careers, sow an unhealthy distrust for the unknown, and steer policymaking and technical preparedness in dangerous directions.

Looking closer at my own environment, I know we graybeards in infosec have this, I would say healthy, tendency to hold in high respect elder members of the community who somehow distinguished themselves "on the battlefield" (especially against governments and institutional adversaries) in the past. But we need to keep our feet on the ground, and accept that times change, people change, and we can at times be right and at times be wrong. Some people think that, because they were experts in a certain field a long time ago, they can afford not only to keep talking like experts in that field forever, but also in nearby, or even unrelated, fields. The Dunning-Kruger effect is real.

Thinking deeper, maybe mine is a typical case of rejection for the image in the mirror: I hope I will never become like that in the future.

(2025-05-03) On privacy VS compliance in Web3

I have been thinking for a while about the issue of anonymity in Web3 (and, more generally, anonymous transactions). The growing realization of the damage caused by decentralized financial technologies is nagging my cypherpunk self, who has been at war for a lifetime against invasive tracking, manipulative marketing, and surveillance capitalism. I think it's time that I collect my thoughts here.

Spoiler alert: I'm not endorsing backdoors, but I think some middle-ground solution must be found.

The problem

Here is the problem. The modern world we live in (where "modern" here means "since currency") has been shaped by the war between the desire of criminals to hide their transactions, and the need of authorities to unmask them. Which is, of course, a super-simplistic way to say that there is a conflict between different parties for the control of transaction flows. This war had somehow crystallized, or stabilized, for a relatively long time until recently, with a status quo where most transactions were trackable, except cash and very complex border-crossing exchanges which would require the collaboration of mutually very mistrusting parties to be tracked, and are anyway not within reach of common folk. I am talking about the pre-2010 world of wire bank transfers, credit card networks, forex, etc. In this world, it was certainly possible for criminals in a suit to move large sums of money from "shell company A in Panama" to "shell company B in Hong Kong", but it was very difficult for Lone Wolf Joe to buy a fake passport online from Dimitri Counterfeitsky, or for large scam/blackmail online operations to be successful in the long run. Sure, there were already plenty of Nigerian princes or Angolan generals with tons of gold to move abroad, but generally speaking these things were always risky for the perpetrator(s), involving either a physical action (meeting Dimitri in person, crossing a border with a suitcase full of cash, etc.), or trusting the assumption that the crime was small enough to not trigger a cross-border collaboration.

The flip side of the coin is that, for this status quo to hold, we built an Orwellian machine which was meant to track everyone's transactions. When I imagine how much control banking institutions and services like Google or Apple have over our finances, I think it's a shame how much we missed out on this: we still don't have, e.g., a way for authorities to get a super-accurate tax assessment from every citizen in an automated way. I mean, if we need to embrace dystopia, let's at least reap the few good sides of it! The only invisible transactions would be those carried out in cash, which is on its way to being banned anyway, and already accounts only for a rounding error at a state level. (Disclaimer: I use cash any time I can, and I hope I'll be able to do so as long as possible.)

Enter Bitcoin. Anarcho-capitalists were enthusiastically looking forward to a world of untraceable transactions. More well-informed people would point out how, no, Bitcoin doesn't make anything anonymous at all, actually it's even more traceable than normal bank transactions. And yet, more forward-looking people would say that it doesn't matter: Pandora's box had been opened, it would be just a matter of time.

This last camp was right. Bitcoin was just the beginning, the missing piece to make pre-existing anonymous transaction ideas really work. Monero arrived, then Zcash, then TornadoCash, then many others. We are now at the point where people are building fully decentralized and private computing platforms. Cryptographic research in zero-knowledge proofs (ZKP), multi-party computation (MPC), and fully homomorphic encryption (FHE) has exploded. All these technologies have their flaws, but overall they work: it is now possible to send a token of a certain value to another address in a completely anonymous way, without anyone outside the transaction being able to see its sender, amount, or receiver. This is a cypherpunk's dream.

And soon the woes arrived. Bitcoin and friends de facto enabled ransomware, as well as money laundering and tax evasion on a massive scale, providing means and incentives and giving rise to criminal industries that would simply be unthinkable without anonymous payment tech. Allegedly, North Korea financed its whole nuclear missile program through ransoms collected by their cyberattack operations, and it is pretty certain that Russia is massively using web3 technology to circumvent sanctions, just to name two cases. Luckily, no shady business ever happens in the western world thanks to cryptocurrencies, amirite?

And almost everybody is happy about it! DeFi, as it is now, does not stand for "Decentralized Finance", but for "Deregulated Finance". Those same criminals in suits who were previously moving sums between shell companies are today doing the same between crypto exchanges and smart contracts. When intentional fraud is not involved, sloppy security or mishandling are. See this link for a long list of disasters in Web3. Bitcoin anarcho-capitalists absolutely do not want compliance (except when they're hacked, then they go crying to the FBI). Banks do not want compliance, because its absence allows them to diversify and play with high-risk investments without repercussions. So, anonymous cryptocurrency is great after all!

Except that this creates a toxic environment ripe for abuse, which makes [dictator] smile, and overall makes most humans more miserable and targets of remote crime. So, no, not everyone is happy about it. Not necessarily for the noblest reasons, but governments and police authorities are cracking down on anonymous fintech. There is a growing interest in the field of "compliance" in Web3, where chains and institutions are trying to collaborate to provide a way to enable banks and other regulators to perform anti-money-laundering (AML) and know-your-customer (KYC) duties on private transactions, the same way regular banks are required by regulators to do in order to avoid financial fraud. But so far all the proposals boil down to the cops' old recipe: backdoors. Let's insert a "lock for the good guys only", so that authorities can trace everything but criminals cannot. Yeah, right.

So, on one side we have backdoors, on the other side we have [dictator] becoming fatter and happier by the day. Is there no hope?

My view on it

What I described above is, in the end, a typical case of weighing pros and cons of a newly introduced tool or technology. When faced with such a dilemma, my gut reaction is to say "the overall effect on society is positive, new tech is always disruptive, this is good". Especially when we're talking of privacy technology, given the sad state of the world as it is now, it's in my opinion fair to lean toward embracing such new tech, and consider the "usual boogeymen" as "acceptable collateral damage". You know what I'm talking about: I don't think Tor creates more drug addicts or causes more children to be abused.

And here is the crux of my problem: after having witnessed for many years the damage enabled by anonymous payments, I am not so sure anymore that the net effect on society is positive in this case, or even that it will eventually be positive many years from now. Hardcore libertarians cherish a world without taxes, without police or government even, where everyone is responsible for themselves, and nobody else can have any say in the way they conduct their business. At my age, I find this view to be childish and delusional at best, arrogant and psychopathic at worst. We literally built a millennia-old civilization on the premise that, no, we're not left to fend for ourselves alone in the dark, there are other people around us, and they are a valuable resource. We need rules to make sure that one's rights do not trample someone else's, and we need authorities and mechanisms to enforce such rules. I do not think that we, as a society, are ready for such a massive shift of paradigm, where I can anonymously pay a hitman to murder my neighbor.

"But wait", you might say, "the crime committed in your example is the murder, not anonymity!". I find this cheap philosophy not very useful: modern anonymous payment technology works pretty well, there is basically no way to catch someone like that without additional circumstantial hints, except of course human errors. You might have read this very old post that I wrote many years ago, and that I still agree with: the seriousness of a crime should be roughly evaluated as the damage caused by the crime divided by the probability of being caught and prosecuted for that crime. It's the only way that makes sense. If this is the case, how should we evaluate the seriousness of a crime committed with a technology that makes the crime almost perfect?

But I acknowledge that even this is a slippery slope. If we follow this reasoning, then it makes sense to ban end-to-end encryption (E2EE) as well, right? Because otherwise police authorities would have no way of prosecuting criminals, and then satanists and rapists and blacktransqueermuslims etc etc, right?

No, I am not advocating for backdoors, not in the case of E2EE, anonymous transactions, or anything else. But not for philosophical reasons: I am not advocating them because I know that they don't work. Because there is no such thing as "lock for the good guys only", and I find it unbelievable that there are still people trying to gaslight the public opinion in this sense, as if believing it would make it magically possible.

So we're back at square one with this long rant. Can a compromise exist, between the holy individual right to privacy, and the collective need to audit transactions and prevent crime?

My proposal

I had the opportunity of tackling this very problem during my research at Horizen Labs. This is not an endorsement of my employer, I don't know if what I'm trying to build will eventually see the light, this is just personal research on a topic which may or may not catch interest from the company - or from anyone else. Nothing of what I write here is confidential, I just wanted to share my view on the matter.

I think the first question to ask ourselves is: what does "privacy" mean? Without going too much into the technical (or even philosophical) details, in the realm of Web3 transactions this is pretty obvious: there are exactly three things that make a transaction "private": the identity of the sender, the identity of the receiver, and the amount transferred. Depending on the technology implementation, you might also want to hide the id of the token itself. Modern privacy tech for Web3 hides some or all of these data fields, typically by using ZKP or some variation thereof: instead of "Alice sending a token to Bob", Alice broadcasts (for everyone to see) a zero-knowledge proof of a statement like: "1) I am the owner of a token of a certain value, and 2) I have transferred ownership of a new token of the same value to another address, and 3) I have burned (erased) my previous token, so I cannot spend it anymore".
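To see what such a transaction leaves on the public ledger, here is a toy sketch of the bookkeeping only. It uses hash commitments and nullifiers in the style of Zcash-like designs, which is my own choice for illustration: the description above does not name a construction. There is NO zero-knowledge proof in this code; the place where a real chain would verify one is marked with a comment. The names `Note`, `Ledger`, `mint` and `transfer` are hypothetical.

```python
import hashlib
import secrets


def h(*parts: bytes) -> bytes:
    """SHA-256 over length-prefixed parts, so inputs cannot be confused."""
    digest = hashlib.sha256()
    for part in parts:
        digest.update(len(part).to_bytes(4, "big"))
        digest.update(part)
    return digest.digest()


class Note:
    """A private token: owner, value and secret never appear on the ledger."""

    def __init__(self, owner: bytes, value: int):
        self.owner = owner
        self.value = value
        self.secret = secrets.token_bytes(32)

    def commitment(self) -> bytes:
        # Public fingerprint of the note; hides owner and value.
        return h(b"commit", self.owner, self.value.to_bytes(8, "big"), self.secret)

    def nullifier(self) -> bytes:
        # Published when the note is spent ("burned"); unlinkable to the commitment.
        return h(b"nullify", self.secret)


class Ledger:
    """What everyone can see: opaque commitments and spent-note nullifiers."""

    def __init__(self):
        self.commitments = set()
        self.nullifiers = set()

    def mint(self, note: Note) -> None:
        self.commitments.add(note.commitment())

    def transfer(self, nullifier: bytes, new_commitment: bytes) -> bool:
        # A real system would verify a ZK proof here, covering statements 1)-3):
        # ownership of an existing note, equal value, and a correct nullifier.
        # This toy only enforces "a burned note cannot be spent again".
        if nullifier in self.nullifiers:
            return False
        self.nullifiers.add(nullifier)
        self.commitments.add(new_commitment)
        return True
```

For example, Alice mints a `Note(b"alice", 5)`, creates a new `Note(b"bob", 5)` for Bob, and calls `ledger.transfer(old.nullifier(), new.commitment())`. Observers see two random-looking hashes and nothing about sender, receiver, or amount, and a second attempt to spend the same note is rejected.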

Now, if we want to introduce "compliance", then it is clear what we have to do: we have to append extra information to a transaction, only readable by authorized parties, which declassifies those three pieces of data above. And the way we manage this "readable by authorized parties" makes all the difference between a "backdoor" and a "more reasonable system".

And what would such a "reasonable" system look like? More than a proposal, this is a wishlist. These are the five minimal properties I think should be required of any sane "compliant privacy" mechanism:

- Decentralization: regardless of anything, there should be no single point of failure in the trust model for declassification. No single authority should "hold the key" for inspecting a user's transaction. Using MPC technology, this power should be split among a quorum of trustees in a committee.

- Accountability: whenever a regulator decides to audit and deanonymize a transaction, this fact should be publicly visible and reported. Picture this as "the name of the judge on the search warrant". This property is crucial, it is the only safeguard against authorities colluding and abusing their power.

- Warranty: if I am under investigation, I must be notified of this ("avviso di garanzia", this seems to be common in Italian law but not sure about other legislations).

- Opt-in: users must decide what kind of information to make available to which regulators and under which conditions. In other words, users should attach a compliance manifest to each transaction. Other nodes of the network will decide whether to accept or not the transaction according to their own compliance policy.

- Programmability: users can revoke and modify their decision, on a per-transaction basis.

The first property is obviously about security, and should be taken seriously. For example, key shares should be split among parties who naturally do not trust each other and/or do not have any incentive in colluding except for investigating a mutually damaging crime.
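As a rough illustration of "no single authority holds the key", here is a minimal sketch of Shamir secret sharing, where any `threshold` trustees out of `num_shares` can rebuild a declassification key and fewer learn nothing. Note the swap: this is a simpler building block than the MPC mentioned above, because here the key is reconstructed in one place, while a real MPC protocol would let the committee use the key without ever reassembling it. The function names and the choice of prime are assumptions for the example.

```python
import secrets

# Field modulus: the Mersenne prime 2**127 - 1 (an arbitrary choice for this sketch).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int):
    """Split `secret` into points on a random polynomial of degree threshold - 1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for coeff in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + coeff) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Lagrange interpolation at x = 0 from at least `threshold` shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

With `shares = split_secret(key, threshold=3, num_shares=5)`, any three of the five trustees can call `recover_secret` on their shares and get `key` back, while one or two colluding trustees cannot.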

The second and third properties are about keeping authorities accountable for abuse. You don't want a system where the authority "pinky-swear promises" to only wiretap 100 transactions per month without having a way to verify this. Ideally, a good system should allow everyone to see that a certain authority has decided to leverage its power for an investigation, but should only allow the target of the investigation to be notified as such. It could in theory be done in a layered way, e.g., the judge is notified whenever a police officer uses this power, but the general public is not, although, personally, I think public authorities should report to the public, not to other authorities. In any case, you don't want the public to know the identity of the specific officer (for their safety) or of the investigated party (for dignity).

Finally, the fourth property ensures, on one hand, that an incentive mechanism pushes users to balance privacy VS compliance: if I set my privacy requirements too high for this transaction, then few nodes will take the risk of accepting it, and will therefore request higher fees from me. On the other hand, it makes sure that information that the user absolutely does not want to reveal remains hidden. The fifth property makes the system flexible in practice.
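To give an idea of how the opt-in incentive could play out, here is a purely hypothetical sketch of a compliance manifest and a node-side policy. Nothing here corresponds to an existing chain or API: the class names, the regulator label, and the fee numbers are all invented for illustration.

```python
from dataclasses import dataclass, field

# The three data fields a private transaction hides.
FIELDS = frozenset({"sender", "receiver", "amount"})


@dataclass
class ComplianceManifest:
    """Attached by the user: regulator name -> fields that regulator may declassify."""
    disclosures: dict = field(default_factory=dict)


@dataclass
class NodePolicy:
    """A node's own rule for which transactions it accepts, and at what fee."""
    regulator: str
    required_fields: frozenset
    base_fee: int = 10
    surcharge_per_hidden_field: int = 5

    def quote_fee(self, manifest: ComplianceManifest):
        """Return the fee this node asks for, or None if it refuses the transaction."""
        disclosed = frozenset(manifest.disclosures.get(self.regulator, ())) & FIELDS
        if not self.required_fields <= disclosed:
            return None  # too private for this node's risk appetite
        hidden = len(FIELDS - disclosed)
        return self.base_fee + hidden * self.surcharge_per_hidden_field
```

A node with `NodePolicy("regulator-x", frozenset({"receiver"}))` would accept a manifest that discloses only the receiver to that regulator, but at a higher fee than a fully transparent one, and would refuse a manifest that discloses nothing. Changing the manifest from one transaction to the next is what the fifth property (programmability) is about.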

There are systems like GNU Taler which, in my opinion, make a lot of sense. In a nutshell, Taler transactions are semi-anonymous: the identity of the sender is always hidden, but the identity of the receiver is not. This mimics real-world shops, where the bakery does not ask for my id to sell me bread when I pay in cash, but the bakery itself is not anonymous and the owner is known. Taler is not decentralized though, it's a PKI-style tech. I would like a "good" privacy-compliance Web3 tech to be able to mimic Taler if the user so wishes.

Does a technology like this exist already? I don't know; it might well, since the Web3 world is moving so fast. If not, will it ever be built? And, in either case, will it ever reach adoption?

Open to hear your feedback, please reach out!

(2025-04-02) On China, racism, and the mysterious disappearance of cybersecurity academic Xiaofeng Wang

You might have read about the very recent news on the mysterious disappearance of US-based cybersecurity professor Xiaofeng Wang and his wife. The story is in evolution, and things might get updated fast, so this is just a very preliminary impression and my thoughts on the matter since some people asked me about it. I leave some pointers below for the detailed report, but here is a quick recap of the story.

Xiaofeng Wang is a prominent US-based Chinese computer scientist, with tons of publications in areas such as cryptography, cyber security, AI, bot and fraud detection, medical and biometric security, and more. He was a professor at Indiana University Bloomington (IUB), while his wife, Nianli Ma, was a system analyst and programmer at the same university. They both recently disappeared in mysterious circumstances: Wang's students have been unable to contact him since mid-March, and his university webpage was taken down on March 19th. He and his wife have been "canceled" from all directories at IUB. On March 28th, the couple's two houses were raided by FBI agents. It was later revealed that, on that same day, Wang received a letter from IUB terminating him with immediate effect, apparently because they found out that he had accepted a new position in Singapore, and was supposed to start his new role this summer. As of today, Wang and his wife could not be reached, IUB declines to comment, and the FBI only acknowledged "court-authorized law enforcement activity".

Everyone is trying to figure out what happened, and all the people who could shed some light on the matter are not speaking. In this climate of uncertainty, wild guesses are not good, but let me try nonetheless.

I have never talked with Wang personally, but I think we crossed paths at some conferences at some point. I know some of his papers, which seem to denote a preference for collaboration mostly with other Chinese scientists. He was involved in very sensitive topics: AI security, genomic data protection, and more.

Reading comments online, conspiracy theories abound, but it seems to me that everyone is afraid of spelling out loud one of the most plausible explanations: Dr Wang was performing espionage for the Chinese government, he got caught, but Chinese secret services alerted him and helped him into hiding. Let me be very clear: I am not saying this is what I think happened, but it seems to me one possible explanation (he wouldn't be the first one). Still, most "polite" comments I read online, even in the infosec community, are kind of la-la-la dodging this scenario.

Why? Is it because of fear of being associated with racism in these tumultuous times? Before exploring alternative explanations for Wang's disappearance, I think we have to talk about Xi's China and Trump's America.

My stance on the CCP is not a secret for anyone who knows me. My wife is Taiwanese (meaning: from the country of Taiwan) and I have fought my whole life against invasive surveillance and authoritarianism. Still, I consider myself blessed to have had a proper education, to have had the opportunity of meeting many brilliant Chinese people, and in general to live in a "bubble" where there is respect for different cultures and where, generally speaking, nationalities do not matter that much. Yes, it's still a bubble, but better than other bubbles. This is just to say that I do not generally buy into the "China bad" narrative, but it seems undeniable to me that China has proven over and over again to be very competent and motivated in terms of espionage capabilities. So, not knowing him personally and being unable to assess the character of the individual, to me the possibility that Wang was indeed performing espionage for the Chinese government would not be particularly surprising.

The Captain Obvious disclaimer is that a Chinese-sounding name does not necessarily imply ties to the CCP, or even to China itself. I personally know Americans with Chinese-sounding names who can't even speak Mandarin. But in Trump's America, a Chinese-sounding name brings a lot of... extra connotations. So, it's also natural to look at other explanations for Wang's disappearance.

I have read theories according to which the FBI raid was racially motivated. In 2025, this is sadly not unthinkable. But I cannot fathom this being the most straightforward explanation, there must be something more. One explanation that I think makes more sense is that the raid was indeed fueled by the current US political climate, but ultimately triggered by IUB as retaliation. We don't know exactly the circumstances around Wang's acceptance of the Singapore position and IUB's finding out, and there are also rumors about IUB taking disciplinary action for Wang's failure to disclose ties to China when receiving a previous grant. This is just speculation, it is difficult to say since nobody is talking. But the scenario I am depicting is: somebody high up at IUB finds out that Wang has secretly negotiated a new position and is about to go poof, not only creating difficulties for teaching and academic organization, but also jeopardizing some personal ambitions connected to Wang's grant and the prestige it brought to IUB. In this situation, a way out could have been to "express concern on Wang's ties to China" with the FBI, and use this as a plausible excuse to cut ties with Wang. Again, I am not saying this is what I think happened, but I think this explanation makes more sense than the FBI saying "uhm, we haven't reached our weekly quota of blacks, latinos and asian simps to raid, let's resort to academics".

Or, of course, it could also be that the conspiracy theories are right. Maybe Wang found out something big and was silenced. Maybe he discovered something very bad or embarrassing for NSA/FBI/DOGE. In our field, this is unfortunately not unheard of.

In any case, Matt Green is right: this is not normal, we can't pretend it is. It seems already clear that IUB did not follow the due process for terminating a tenured faculty member. But, in today's America, nobody respects the law anyway, and people can be "canceled" as if their very being exists only subject to the benevolent approval of someone higher up in the food chain. This is the world we live in, I don't like it, and you shouldn't either.

(2025-03-13) NIST selects HQC as fifth algorithm for quantum-resistant encryption

NIST has announced the end of the evaluation phase for adding another cryptographic algorithm to the suite of ciphers designed to be resistant against quantum computing. The winner is HQC, which is now going to enter the standardization phase.

HQC is a code-based KEM (used for encryption rather than signatures) which has been selected mainly on two criteria: being reasonably performant, and not being based on lattice assumptions (because there is already ML-KEM for that, and you want a backup option in case cryptanalysis one day breaks lattice-based problems).

Personally, I would have also liked to see Classic McEliece standardized: this is a scheme which is based on still different hardness assumptions, very robust, and has the property of having extremely small ciphertexts. The drawback is the large size of the public key, but public keys can be distributed once and then this amortizes the overall cost, so that it makes a lot of sense for certain applications, but NIST decided that this would have been too much of a "niche" use case.

In a burst of productivity, I decided to perform a minor restyling of this website. Among these novel breakthrough innovations of science, you will find:

- I added a picture of a handsomer version of myself (circa 2018) in the header, so that search engines do not index Whit Diffie's picture as a preview of my links anymore.

- Added icons with links to connect via email or Jabber/XMPP, plus link to my Mastodon account.

- Started using ISO-8601 for date format, because why not.

- Hyperlinks color now does not cause your eyes to explode.

- General but very incomplete HTML sanitization.

But the most awesome part is the addition of an RSS feed, which now boldly brings this website into the year 2001. Now, this is what progress looks like!

(Mar 12th, 2025) On cashless restaurants and QR menus.

Yesterday night I went out with a friend in Zurich, and we were searching for a place to have dinner. We entered this popular-looking Thai-fusion restaurant. Only after we had taken a seat did we discover that:

- There was no paper menu, just a QR code.

- The QR code was not pointing to a PDF menu, but to an ordering website full of trackers.

- "We are a cashless restaurant".

- "Cash tips are welcome".

We left the place and went to eat somewhere nearby.

In a very timely manner, here is what I found on Slashdot this morning. I can confirm the findings!

Let's leave security and privacy considerations aside for a second. I already use a screen too much during the day, thank you, I would rather not fiddle with my phone if I go out eating. And, what exactly should I "tip" for?

Oh, and please don't hit me with the environment BS, I really don't think that a bunch of paper menus have any practical impact in the big picture of the environmental footprint of a restaurant. The real reasons restaurants go for QR menus are: user tracking, possibility of surge pricing, and reduced costs. But I also suspect that there is some component of "hip" factor. You know, "young, cool people do everything with a phone nowadays".

I think I like the idea in this Slashdot comment. I might print my own stickers.

(Feb 20th, 2025) Quantum Computing news: Microsoft announces first TOPOLOGICAL qubit!

I just want to briefly report about the recent news from Microsoft, who announced the realization of the first topological 1-qubit device. You might wonder, why is this important? One qubit doesn't sound too exciting, and surely you can't do anything useful with it. Well, the interesting part is topological.

I still remember my very first PhD trip abroad: the 12th Canadian Summer School on Quantum Information in Waterloo, ON, in 2012. There, I first learned about topological QC in a lecture by Steve Simon (not to be confused with the other Simon). The rough idea: instead of using the "traditional" approach of QC, where you build a qubit by manipulating an extremely small and fragile system, like a single atom, in topological QC instead you use a system composed of a 2D grid of many small objects whose interactions follow a pattern that you can "move around" the grid without moving the objects, and you represent the state of a qubit as the global topological property of this pattern of interactions (think of it as a knot of braids in space-time). As these patterns can "braid around" in loops, changing the state of a qubit means unlooping this "space-time knot" and re-looping it differently, which is extremely difficult, and therefore you obtain a system which is "natively" stable and resistant against decoherence, unlike traditional fragile systems (ion traps, superconducting qubits, etc) which are constantly disturbed by the environment, and require constant and expensive error-correction to remain stable.

The idea sounded too good to be true, and in fact it was. The catch is that these "small objects" from which you build your 2D grid must behave very "weirdly" in order for these "braidable interactions" to appear: they cannot be fermions, nor bosons, but a new type of "virtual particle" called non-Abelian anyon, which only exists in 2D but not 3D. It was not even clear whether these particles could exist in nature, so for a long time topological QC was considered kind of fringe. A few attempts at detecting anyons over the decades have given mixed results, so not many were willing to invest in this kind of research.

Except Microsoft. Microsoft has been championing the idea of topological QC for a long time, but (I think) more as a differentiator to other competitors like Google and IBM. Regardless, for many years now topological QC was only investigated at MS.

Now it seems like we finally have some news: Apparently, Microsoft has managed to create non-Abelian anyons with simple household material (indium arsenide - aluminium nanowires, you surely have some in your pantry), and they showcased a 1-qubit device built with this technology, plus a roadmap to scale the device to fully error-corrected qubits in the future.

Is this cool? Yes, I think it absolutely is.

Is this practically promising? I have no idea. Even Scott Aaronson does not know.

(Feb 18th, 2025) Battle of Instant Messengers: my view on Signal VS Matrix VS XMPP/Jabber VS others.

People often ask me what IM (instant messenger) I prefer and why. If I want to joke, I might link to this XKCD, or I might give one of the following answers:

Kidding apart, as a paranoia-lover hacktivist, I try to give recommendations which, I think, lead the world toward a better state according to my views. The advice I give in this post might not reflect the best option for you personally, and is limited by my (lack of) knowledge. But let's give it a try.

So, what are the options? There are literally thousands, and I do not pretend to know them all, not even a small part. But let's start with the easy ones.

Whatsapp

You know what I think of Whatsapp already. It is so popular that in many places (in Italy, for example) it has become the de facto standard of communication, even with public offices or commercial entities. I find this not only extremely problematic for privacy and national security, but utterly disgusting in general. I do not use Whatsapp, and I must admit that it's hard. The only good news here is that this app is becoming popular mainly among the boomer-to-millennial generations, so it's hopefully on its way to decline.

Line

It's basically an Asian Whatsapp. Do not.

Telegram

This is even worse than Whatsapp for so many different reasons I don't want to spend time on. I find it mind-boggling that it has become so popular even among circles like cryptobros and some anarchists.

WeChat

If there is one app even worse than Telegram, this must be it. Installing WeChat on your device means installing a backdoor for the Chinese Government to spy on you.

Threema

Once touted as a "Swiss privacy-oriented IM solution", over the years it has shown so many issues that it has completely lost my trust. Luckily, its adoption is quite limited.

Discord

I wish Discord to burn in Hell. I don't even know how it became so popular. It's basically IRC, but with ads, super annoying notifications, and completely centralized. They even mislead the users by calling "servers" what actually are "channels".

Slack

You must really like pain if Slack is your go-to IM. Personally, I hate Slack, but in a corporate environment I think it is a better alternative to Teams or Google.

Mattermost

More or less like Slack. Do not use it if you don't have to.

Let's get to more "serious" options. The following are the 3 main IM solutions that most privacy/security advocates would recommend. However, there are usually very strong opinions on what is "the best". Following are my views on it.

Signal

Signal has been, for quite a few years now, the gold standard for messaging security. You get a Whatsapp-like experience, but with top security features, quantum-resistant crypto, privacy-by-default and, most importantly, leadership and roots with a strong track record of ethical hacktivism and cypherpunk values. I use Signal daily with family and most of my friends, and it's the standard recommendation for most people. The usability is unbeatable.

But.

There are issues with Signal. First of all, over the years Signal has taken some questionable decisions, like bundling in a shady cryptocoin payment system, and forcing users to adopt a recovery PIN which transmits, albeit in encrypted form, too much data to Signal, under basically the sole assurance that Intel's SGX is secure (LOL). There is also the extremely annoying part that Signal still requires registration via a mobile number (as a 2FA? In 2025? Seriously? Why?). Since last year, Signal finally adopted usernames, but only on top of normal registration via phone number. Moreover, for a long time Signal was only available from the Play Store, and required Google Services to run. Luckily this is not the case anymore: you can install Signal's .apk from their official website (at least for the first time, then it keeps itself updated), but it is still not available on F-droid (understandable, kind of) or Accrescent (not so understandable).

These issues, by themselves, are not terrible enough to downgrade Signal to "not recommended" IMHO, but they are also not good, and could have been prevented. They are, I think, the symptoms of a much bigger problem with Signal: centralization.

See, Signal is, at the very core, "Whatsapp run by non-evil people". Which is bad, in the sense that you never know what's gonna happen next. Even non-evil people have to eat. Signal is currently living mostly on donations, but things might change in the future. What if VCs jump in? What if Signal is acquired? What if the new CEO is not "same as old CEO"? You, as a user, have no control over this, and I think that digital sovereignty should be your nr 1 concern. To put it differently, even if I have a lot of trust in the folks currently running the show (and I really do!), I think Signal is one of those projects at very high risk of enshittification in the future.

In short, Signal is still one of the easiest and most secure IM choices, and probably the best option to recommend to non-tech people, but I found myself less and less comfortable recommending it to family and friends over time.

Matrix

Matrix is the "cool kid" in the realm of private and secure IM, and many security communities migrated there in the last few years. Matrix is a federated IM service, meaning that anyone can host their own Matrix server, and users can join whatever server they want and still be able to interact with users from other servers, a bit like e-mail. Good security features, works well on mobile, many different clients to choose from, and thanks to its bridges you can connect through Matrix to other services, even Whatsapp. Sounds good, doesn't it?

The reality is, unfortunately, not so good. First of all, usability is... meh compared to Signal. And that's as long as you use Element, the "official" client; otherwise it's even worse. Nothing too wrong with Element, but the number of options and features is overwhelming for the newcomer, and it takes some time to get used to. I think even I haven't fully grasped how multi-device sync works...

But that's not the full story. There is, generally speaking, a bunch of reasons to not trust Matrix completely, which in essence boil down to: difficult to decentralize. Even if Matrix is theoretically federated, in practice running your own server is such a PITA that only large organizations can afford it, due to resource costs and difficulty in maintenance. Running a Matrix server is heavy, requiring generally many Gigabytes of storage per user.

I do use Matrix, but it's not my favourite IM. You can reach out at @tomgag:aria-net[DOT]org .

Jabber/XMPP

The grandpa of secure, federated IM, but still rockin'. First of all: You should not call it "Jabber" anymore, that's the old name, the official name since 2002 is XMPP (Extensible Messaging and Presence Protocol). XMPP is the backbone of a plethora of IM systems. Unlike Matrix, which aims at offering a fully fledged experience out-of-the-box, XMPP aims at being lightweight and extensible through plugins. This has the advantage that hosting your own server is very easy: compared to Matrix, the effort and requirements are minimal. Also, because it is a completely open specification, it has been adopted over time by many government and non-profit entities (rescue operations, military, NGOs, etc) as "the" open standard of choice. The disadvantage is mainly that, because the adoption of extensions is so fragmented, there is no "uniform" user experience: things that are possible with one client (e.g. audio/video calls) might not be possible with another, or might not be supported by some servers.

Speaking of XMPP security, even encryption was not meant to be "default", and was added as an optional extension. The modern standard for XMPP encryption is OMEMO which is, roughly speaking, equivalent to Signal (with caveats, lots of them, yes, I know).

And this is where the fight starts!

There are very strong opinions favoring XMPP over Signal or vice versa. I admire Soatok and I think he's right on many things. I also think he's wrong on many other things, including this one. I agree more with this one instead.

I do use XMPP and I love it. You can reach out at xmpp:tomgag[AT]draugr.de (I do not host my own server yet, but I will probably do so at some point). I use Gajim as a desktop client for Linux, and Conversations.im on mobile (Google-less Graphene OS) and they both work perfectly for me: text, audio, video, whatever. Also Shufflecake's official chat is hosted on XMPP, so drop by to say hi!

Summing up, in my personal opinion: XMPP > Signal > Matrix > Anything else. The situation is not optimal:

- Signal is centralized.

- Matrix is pretty much centralized, and has a shady ecosystem.

- XMPP has non-uniform user experience, things might not work, adoption is scarce, cryptography is less good than Signal's.

Is there really no better alternative in 2025?

Simplex

This is pretty new and I haven't tested it in depth yet, but I've read great reviews about it. I will keep this updated. Stay tuned!

Lastly, how about P2P IM? I don't know of many (Briar?), feel free to suggest one if you care, but the problem with P2P systems for IM is that, in general, users can only communicate if they are online at the same time. Which might be acceptable in some use cases, but it's not a solution that will cover most users' needs.

I hope this was useful to you, or at least clarified a few doubts. Just don't use shitty IM, especially if owned by some psychopathic rich individual, it is bad for you and humanity!

(Jan 1st, 2025) Announcement: I'm starting a new collaboration with Horizen Labs!

New year, new life! After exactly 6 years of service, yesterday saw the end of my employment at Kudelski Security. Effective immediately, I am joining Horizen Labs in the role of Senior Cryptography Researcher.

I want first to remark how grateful I am to Kudelski Security and my amazing ex-colleagues for these awesome 6 years, it was really a good ride! But I felt it was time for a change, and I found in Horizen Labs a great opportunity to expand my knowledge in cryptography. In my new role, I will help to research, develop and optimize zero-knowledge proof systems such as STARKs and SNARKs. This is an immensely complex field which has always fascinated me, and finally I have the opportunity to dig deep into it! I find the topic of ZKP interesting regardless of the cryptocurrency applications, as I believe many privacy-friendly technologies can benefit from these schemes, but I also liked the pragmatic mindset and the good atmosphere at my new team. I am looking forward to tackling new challenges!

For the record: I am not relocating, I will still be based in Zurich, but I will visit Milan occasionally, where Horizen Labs has an office where most of the tech people are.

(Aug 28th, 2024) A retrospective rant about (some of) DEF CON Villages

Finally I have some time to report about my recent trip to the US for attending Black Hat and DEF CON. I also went on an AMAZING (but short) solo road adventure after DEF CON, which was probably the best part of the trip, but I don't want to talk about that. I want to talk about DEF CON, and especially (some of) the villages. The reader shall pardon the unusual writing style that I will adopt for this post, definitely unprofessional and more akin to a rant from an internet troll than to an objective and rational argument. Just for the lulz.

First of all, it must be stressed that I'm no DEF CON veteran, in fact my first DEF CON was only last year; I am therefore in no position to assess the "trend" or compare this year's event to the "history" of this amazing convention. I am also in no position to comment about this year's badge drama, because I don't think I have enough information about that to form an opinion. But, as a single data point, this year's DEF CON felt to me "less cool" than last year's. Maybe the novelty factor, or (more likely) the new venue. Having to drive through the Loop to reach the venue on "public transport" from the Strip? Brrr... cringe. Also, how the f*** is it possible that all the 2XL t-shirts are sold out during the first 23 minutes from open doors? I mean, I don't want to imply anything with this but... you are selling merch at DEF CON... you should know your average customer... May I kindly suggest that you have the BULK of your merch in 2XL and 3XL size and only have a smaller amount of the rest?

But let's talk about something else: the villages. From my understanding, DEF CON villages became more and more prominent over the last years, as a "more inclusive" way to create community. Which is cool, and I'm sure that there are *some* villages that are tremendously fun and interesting. Unfortunately, that did not apply to the two villages I was mainly interested in.

One of these two villages, let's call it the "sci-fi village", is pure crap. No joke. But I was aware of it, because I visited it last year already, so I had no big expectations. To be fair, due to the very nature of the "sci-fi village", it's a bit difficult to have activities/talks that are both engaging AND technically interesting, and moreover, this year I didn't spend too much time there, so it's definitely possible that the situation improved, or is going to improve in the future.

The second village, instead, let's call it the "super-security village", or SSV. I missed it last year, but I've heard good stories about it, so I decided I need to show up and, dunno... get involved? Get "included"? Offer volunteering help for next year's edition maybe, or even submit a talk or propose something cool. For sure I should bring there my Shufflecake stickers! I mean, this is DEF CON, it's STICKER-CON! Everyone LOVES stickers at DEF CON, and I'm sure the SSV will be happy to have Shufflecake stickers around!

Full of trepidation, I wear my Shufflecake t-shirt and follow the map to the location of this village inside the enormous venue. The first impression is already... disappointing. The SSV has been allocated one of the largest village spaces: there is a stage, desks for the organizers, a lot of tables around, even some large and well-made custom props. And it is... almost empty. Nobody on stage, maybe 10 people all around, most of them look like organizers. The tables are dark and empty.

I put on my most genuine smile and approach the desks of the organizers, who... ignore me. They keep chatting among themselves with an aura of boredom, or keep staring at their phones and laptops. I keep smiling and nodding around with no success. After some time I gather my courage: "Ah-ehm... hello?". A guy maybe half my age slowly raises his head to me, his look is the cosmic emptiness of enthusiasm and interest. "Hi, my name is Tommaso, I'm new to this village but I also love super-security! So, I was wondering... what do you people do here, what is there to see?".

The guy picks up a napkin from a pile. Oh wait, that's not a napkin, it's actually a printed leaflet folded in half? He hands it over to me. "You see, this is the SSV, we do super-security. You know, this and this. And this is the program". The program looks... amateurish? To put it nicely. "Intro to super-security". "How to make your phone super-secure". "Surprise talk by a university professor". WTF? And the program is... sparse, there are, like, only two talks this morning.

I must try hard not to be snobbish, because I would hate a snob myself. So I keep smiling and say "Ah cool, you know, I'm a super-security practitioner myself. Actually, may I leave some of these stickers around? Have you ever heard about Shufflecake? No? TrueCrypt? Neither? OK, this is... an open-source software to... encrypt your disk and... it's developed by a friend of mine and myself and... we published a paper at CCS last year and... I thought..." (my words fumble as I see the expression of boredom on the guy turning first to confusion and then to skepticism) "I thought... this would be perfect for the SSV... maybe some people are interested... this is the website... what do you think?".

"Weeeell" says the guy, crossing his arms on the chest and leaning back while pulling his chair away from me. "You know, I cannot... we cannot... endorse third-party software. Understand?".

STICKER-CON, YOU ASS, THIS IS STICKER-CON! IS THIS THE WAY TO WELCOME ME?

"Uh... Oooook... I see... May I... leave the stickers on the tables around here then?". The guy pulls a nervous smirk. "Umph! Well, I'm not POLICING THE TABLES... YET."

I am OUTRAGED and I leave the place. I have never been so coldly welcomed at a private party to which I was not invited. I decide to have a look at their website.

- Outdated ("past talks" 2020).

- Full of JavaScript that would make RMS scream in fear.

- A Google Calendar form for showing the program.

- All organizers listed as their Twitter handles only.

- "Store" section.

- "Subscribe to our YouTube and Twitch channels so you don’t miss out!"

WHAT IN THE DEPTHS OF HELL AM I SEEING HERE?

I resolve to write this ranting blog post and I keep enjoying my DEF CON. But the day after, I change my mind: I should not be an asshole just because I met a weird guy, maybe he was not in a good mood, or jet-lagged, or under drugs, or whatever. I cannot judge the SSV just by my interaction with ONE of their organizers - provided he was an organizer indeed, and not a random volunteer. So I decide to try again, with renewed enthusiasm!

I go to the SSV again and the situation is like the day before. But I don't see yesterday's guy, so maybe I will have better luck today! I approach again the desks of the organizers, and this time I am welcomed by another 20-something guy who seems actually friendly and full of enthusiasm!

"Hey, welcome to the SSV! Check our program and if you have any questions just ask!" Oh yes, this is going well. "Hi, I'm Tommaso and, this is my first time at this village but I am a super-security practitioner myself and..." "OOOHHH SERIOUSLY? Then you absolutely have to talk with [random name], he's also an expert in super-security, you know... HE STUDIED MATH AT THE UNIVERSITY!" and he made his eyes as big as golf balls while saying that. "Uhhh... sure, I'd be happy to talk with him... but for now I just wanted to understand, what kind of talks or activities are you people interested in? You know, I was considering contributing next year". "Ah, in this case you MUST ABSOLUTELY talk with [name2] who's main organizer, come with me, I'll introduce you".

The guy walks me along the tables, while I am wondering what is exactly the meaning of "introducing me" since I barely said a word about myself. I have the feeling that the guy just wants to get rid of me quickly, but whatever. He points me to another human being sitting there doing something at the computer and then says "Hey, this guy here wants to talk about talks, he's a super-security practitioner." and then he leaves. The other human being looks at me surprised and says "Oh, hello there!". But before we can even start a conversation, a bunch of other people arrive, interrupt us and (ignoring me) start to talk with the organizer, who quickly apologizes to me "I'll be around later, excuse me" and leaves with the other bunch of people.

I can't even. Are all zoomers like this nowadays? I feel... old.

Humiliated, I go back to the friendly guy. "Hey, how did it go?". "Well... busy times I guess... I'll talk later with [name2]. But may I ask you... Are you one of the organizers?". "Oh no" the guy says, "I'm only a volunteer, I help to run things!". "OK" I say, "and how did you get involved?". I'm asking this to the guy to understand how this social group was formed. Because, so far, to me it looks like these are a bunch of stoned "internet friends" who somehow managed to scam DEF CON into conceding them their "private corner" (either that or they are Dogecoin millionaires and paid for the space themselves), and are not really interested in "including" other people at all.

"Well, I didn't know anyone at first, but then I joined their chat and I got to know people. The chat is basically the main thing where the community lives, we all know each other there. YOU SHOULD JOIN OUR DISCORD AS WELL IF YOU WANT TO CONTRIBUTE!"

D-I-S-C-O-R-D? I mean, you are the SUPER-SECURE VILLAGE and you have your community ON DISCORD? Why not FACEBOOK???

And this was the straw that broke the camel's back, so I changed my mind AGAIN and I decided to write this post.

I conclude with two thoughts. The first one is a story of my time back during my PhD in Darmstadt. Every time we would go out for lunch, we would cross a part of the residential neighborhood, and we would pass in front of a super-typical "Quartiersstube", one of countless many in German towns. I had never been into one, so I asked some of my German colleagues to go there. "Are you kidding?" they replied. "You just... cannot go inside there... that's not for you... I would not go there myself! You must be... born and raised here, in 'da 'hood... or a friend of a friend...". I did not understand. It was a public venue after all. So, one day, I decided to go there alone and order a beer. Let me just say that I got my beer, but the way I felt at the SSV was more or less the same I felt inside that pub.

The second thought is, what price is reasonable to pay for "inclusiveness" at a venue like DEF CON? And by "inclusiveness" I do not mean stuff like, welcoming everyone regardless of sexual identity, skin color, etc, that's something I take for granted and, hopefully, we will be able to take for granted more and more in the future. What I mean is that, at the end of the day, it's a hacker conference, people pay to go there and expect a certain level of technical involvement. It's fine to have an "intro to XYZ" session for n00bs, but if more than half of your program is like that, then I question your belonging to this "hacker conference".

In any case, this is just a very un-serious rant about my personal, subjective experience. I am sure I was just unlucky and next year it will be much better. Would you accept a technical talk submission by a humble super-security practitioner? Please welcome me better next time, I have expectations.

But I'm still not joining your Discord.

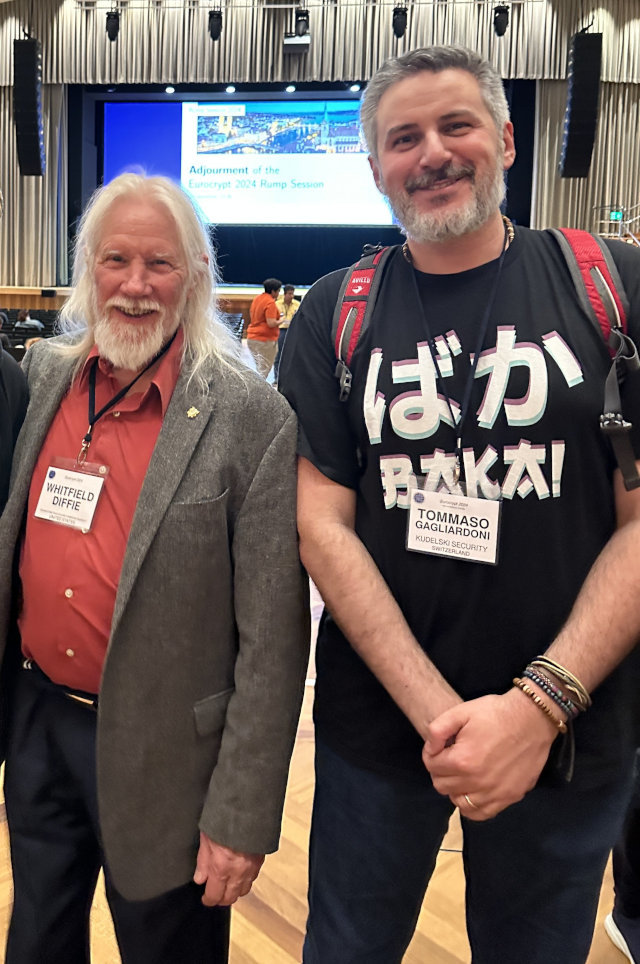

(May 30th, 2024) How I Put my Foot in my Mouth with Whit Diffie at Eurocrypt 2024

Yesterday night I was attending the rump session at Eurocrypt 2024 in Zurich. Whit Diffie (yes, that Whit Diffie) was there and, during one of the breaks, he very kindly allowed people to queue up in line to take pictures with him. For a second I considered how kind of unfair it was, having a multitude of super-world-famous cryptographers all there at the venue, and still people only wanted to take pictures with him, but, I mean, Whit Diffie is Whit Diffie after all! So I lined up as well, and when my turn came I asked if I could have a picture next to him. "It would be an honor", I said, and he smiled and nodded back. Then I took place next to him, while another person was taking the picture with a phone. And then I told him something.

What I intended to say was something along the lines of:

I've been in this field for so long, and yet I never managed to take a picture with you before, thank you so much!

What I did say instead, because I was distracted by someone talking at me and then I slipped my tongue, was:

I've been in this field for so long!

He laughed at me and said "Yeah, I've been for longer!".

And then spaghetti fell down from my pockets all around me. It really was an honor!

(Feb 8th, 2024) Another Change of Host

I'm temporarily moving this website to a different host, still with Hetzner but I'm playing around a bit. SSL certificates will soon change as well. The new IP will be 49.13.21.214

(Jan 25th, 2024) ML Model Collapse as Radionuclide Contamination in Post-War Steel

I keep hearing that ML models such as LLMs and other transformers are prone to "model collapse", i.e., the process according to which the behavior of these models degrades over time due to the progressive ingestion during the training phase of more and more ML-generated content. For example, the more and more ChatGPT is used, the more and more the Internet gets flooded with ChatGPT-generated content, and therefore subsequent iterations of ChatGPT are trained with less human-made text and more, lower-quality, machine-generated text. Trash in, trash out.

It looks like this problem is here to stay and it is so bad that, apparently, some AI companies are already scraping the Internet Archive for pre-ChatGPT content. This phenomenon gives a huge advantage to companies like OpenAI, which entered the business first and have therefore made it in time to stock large datasets of "pure" data.

Here is a random thought. This feels similar to the search for low-background steel in radio-sensitive detectors and applications. This steel is scarce and precious, usually salvaged from pre-1940 sunken vessels - just like data scraped at low-bandwidth from the Internet Archive.

I hope that, just like the need for this pre-war steel became mostly obsolete after the nuclear ban treaties, we will reach at some point some form of AI treaty which will make it possible to flag most machine-generated content as such. Technically, I have no idea how to do that, but life taught me to not underestimate the power of social norms and regulations, so who knows?

(Nov 13th, 2023) A Trick to Speed up RSA Modulus Generation

Recently I had reasons to recall something that happened in 2019 at Kudelski Security during some client project. At some point there was a discussion on how to speed up the generation of RSA keys. As you might know, you need two primes roughly of the same size, p and q, but they cannot be "any" primes, they must be (among other things) "safe primes". In particular, they must have the property that (p-1)/2 and (q-1)/2 are still prime. And this is annoying, because first you must spend a lot of CPU time generating a large prime, and then maybe you have to discard it because when you check the condition above it doesn't hold. That's a big bottleneck of RSA key generation.

Then I made the observation (and I cannot believe I'm the first one to have made such observation) that for this property to hold, the last 2 LSB of the prime must *both* be 1, because large prime implies odd, so modulo 2 must give result 1 both for the candidate prime and its half. So, having these 2 LSB both set to 1 is a very minimal condition to ensure that your numbers are candidate safe primes.
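As a quick sanity check of the observation above, here is a small sketch that enumerates safe primes by brute force and looks at their two least significant bits. The helper `is_prime` and the bound of 10,000 are my own illustrative choices, not something taken from the client project. The only exception it finds is 5, whose "half" is the even prime 2, which is consistent with the "large prime implies odd" argument.

```python
def is_prime(n: int) -> bool:
    """Trial division; good enough for the tiny numbers used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


# Safe primes p below 10_000: both p and (p - 1) / 2 are prime.
safe_primes = [
    p for p in range(3, 10_000)
    if is_prime(p) and is_prime((p - 1) // 2)
]

# Safe primes whose two least significant bits are NOT both 1.
exceptions = [p for p in safe_primes if p & 0b11 != 0b11]

print("first safe primes:", safe_primes[:8])
print("exceptions to the '...11' rule:", exceptions)
```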

The way this was implemented in the project we were working on (e.g., for a 2048-bit RSA key, so 1024-bit primes) was the following:

- Generate a random 1024-bit string.

- Set the MSB and the two LSBs to 1.

- Test the 1024-bit number obtained for primality; if it fails, go back to the first step.

- Right-shift this 1024-bit number by one bit.

- Test the 1023-bit number so obtained for primality; if it fails, go back to the first step.

- Return the 1024-bit number as a safe prime.

Which is cool, but I then observed that the method below is probably faster in most cases:

- Generate a random 1023-bit string.

- Set the MSB and the LSB to 1.

- Test the 1023-bit number obtained for primality; if it fails, go back to the first step.

- Left-shift this 1023-bit number by one bit and set the LSB to 1 again.

- Test the 1024-bit number so obtained for primality; if it fails, go back to the first step.

- Return the 1024-bit number as a safe prime.

This algorithm looks very similar to the first one above, and for sure it produces the same output, but it's not the same. In fact, when we tested it, it was indeed slightly faster than the first one.
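To make the two procedures concrete, here is a minimal Python sketch of both. This is not the project's code (that was in Go and is not reproduced here): the function names, the Miller-Rabin helper and the number of rounds are my own assumptions, and a real implementation would use a vetted library test.

```python
import secrets


def is_probable_prime(n: int, rounds: int = 20) -> bool:
    """Miller-Rabin with random bases (a stand-in for a real library test)."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29):
        if n % small == 0:
            return n == small
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = 2 + secrets.randbelow(n - 3)  # random base in [2, n-2]
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a witnesses that n is composite
    return True


def safe_prime_top_down(bits: int) -> int:
    """First method: test the full-size number, then its right shift."""
    while True:
        # Force the MSB and the two LSBs to 1.
        p = secrets.randbits(bits) | (1 << (bits - 1)) | 0b11
        if is_probable_prime(p) and is_probable_prime(p >> 1):
            return p


def safe_prime_bottom_up(bits: int) -> int:
    """Second method: test the (bits-1)-bit number, then shift left and set the LSB."""
    while True:
        # Force the MSB and the LSB of the smaller number to 1.
        q = secrets.randbits(bits - 1) | (1 << (bits - 2)) | 1
        if not is_probable_prime(q):
            continue
        p = (q << 1) | 1
        if is_probable_prime(p):
            return p
```

Calling either function with `bits=1024` gives a candidate for one RSA factor; for a quick experiment, something like `bits=64` returns almost immediately.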

The intuition is the following: testing a 1024-bit odd number for primality is slightly more expensive (and slightly less likely to succeed) than testing a 1023-bit one. You might argue that the difference should be minimal (and you'd be right in general), but these 1024-bit numbers are not really random odd numbers: they are all set to be 3 modulo 4, which means that the probability of one of them being prime is even less than what you would expect for a random 1024-bit odd number. In other words, in both algorithms above, the 1024-bit testing is actually quite a bit trickier than the 1023-bit testing.

In the first algorithm you do "the hard work first", by repeatedly doing the 1024-bit testing. But then, when you finally have a positive match, you are not guaranteed that the right-shifted 1023-bit number obtained is still prime - in fact, there's only a small chance of that happening. In the second algorithm, instead, first you do a lot of 1023-bit testing, and only for each candidate that passes do you do the "difficult" 1024-bit testing. The overall number of primality tests is roughly the same, but the difference is that in the first algorithm you do many "hard" tests and few "easy" ones, while in the second algorithm you do many "easier" tests and few "hard" ones.
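The "many hard tests, few easy ones" pattern can be made visible by counting primality tests by operand size. The sketch below is only an illustration under my own assumptions: it uses toy 20-bit numbers with trial division, a seeded non-cryptographic generator so the counts are reproducible, and hypothetical names throughout. It says nothing about actual timings.

```python
import random
from collections import Counter


def is_prime(n: int) -> bool:
    """Trial division over odd divisors; only suitable for toy sizes."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def count_tests(bits: int, bottom_up: bool, rng: random.Random) -> Counter:
    """Generate one safe prime and count primality tests by bit length."""
    tests = Counter()
    while True:
        if bottom_up:
            first = rng.getrandbits(bits - 1) | (1 << (bits - 2)) | 1
            second = (first << 1) | 1
        else:
            first = rng.getrandbits(bits) | (1 << (bits - 1)) | 0b11
            second = first >> 1
        tests[first.bit_length()] += 1
        if not is_prime(first):
            continue
        tests[second.bit_length()] += 1
        if is_prime(second):
            return tests


rng = random.Random(2019)  # NOT cryptographic: for reproducible counting only
bits = 20
top_down, bottom_up = Counter(), Counter()
for _ in range(100):
    top_down += count_tests(bits, False, rng)
    bottom_up += count_tests(bits, True, rng)
```

After the loop, `top_down` should be dominated by `bits`-sized tests and `bottom_up` by `(bits-1)`-sized ones, which is exactly the asymmetry described above.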

This difference is enough to be detectable. I don't remember the exact numbers, but I seem to recall something like 2% on a Go implementation - feel free to correct me. So, keep this in mind when you implement your own RSA key generation cryptography - just kidding, don't do that!

Also, this is n00b stuff, I'm sure there are a zillion better ways to generate RSA primes much more efficiently.

(Oct 7th, 2023) Shufflecake Accepted At ACM CCS 2023

I can finally break the confidentiality embargo and proudly give the big announcement: The Shufflecake research paper (coauthored with Elia Anzuoni) has been accepted at ACM CCS 2023! We are super excited to present Shufflecake at one of the most prestigious cybersecurity conferences worldwide. A 50-page full version will be available in the next couple of days both on ArXiv and IACR's Eprint. Check the Shufflecake website or the Shufflecake Mastodon for news.

There is a lot of "meat" in the full version, especially regarding future work and planned features. The most pressing point, as previously explained, is the topic of crash consistency. We have ideas on how to do that, but we are still working on implementations.

Many people will probably find the discussion on the topic of multi-snapshot security most interesting. We know that in order to achieve multi-snapshot security we NEED to use WoORAMs, but they are very slow. Our insight is to try to achieve a weaker version, "operational" multi-snapshot security, which (we argue) should be enough in real-world scenarios.

But my favourite topic, and one that I think has been foolishly overlooked in previous literature, is the topic of "safewords". What is a safeword in the context of plausible deniability? It's the idea that a user might want to have the "last resort" possibility to surrender to an adversary and prove that she has nothing to hide. The concept would be: If I'm caught by my CS teacher doing non-school activities on my laptop, I can use plausible deniability and be un-prosecutable in front of the principal, but if the police come knocking at my door, I don't want to get in trouble and I want to comply - and PROVE that I am complying.

With TrueCrypt this is easy: just give up the decoy password to the principal and the real password to the police. In Shufflecake and similar systems that's not so easy, because there are many layers of nested secrecy. So "the adversary does not know when he can stop torturing you" because he cannot trust you whatever you say.

Is this good or bad?

In the paper we make the following points.

- It is actually possible to implement a safeword even on systems such as Shufflecake. It's an easy trick really.

- The idea of a safeword is SUPER BAD for plausible deniability. Not only should you NOT USE a safeword, but the mere POSSIBILITY of having a safeword degrades the operational security of the scheme.

- This problem does not seem to have been addressed before in existing constructions, as all of them (even WoORAM-based ones) have the implicit possibility of creating a safeword.

- We propose an idea to make Shufflecake "safeword-free"! This is future work but it's definitely going to be implemented at some point. The idea is that we can make ANY kind of safeword-like feature impossible by implementing a nice hack to have potentially INFINITE nested volumes (rather than limited by an artificial hard-limit, which is 15 in the current implementation).

I want to expand a bit here on the last idea. None of what I write here is meant to be rigorous, this is just the high-level intuition. STRICTLY FUTURE WORK!

Currently, Shufflecake packs all the headers (DMB and 15 VMBs) at the beginning of the storage space, and data sections come afterwards in the form of 1 MiB slices. If we want to have the possibility, at least in theory, of having an unbounded number of volumes, then we need an infinite (subject to total storage space) number of headers, and hence we cannot pack them at the beginning of the disk. Clearly, most of these headers will actually be "bogus", i.e. they will not be headers at all, but the adversary should never be sure about that.

So my idea was the following: imagine we don't have a DMB and 15 VMBs, but just a unified, per-volume "header" (encapsulating everything we need for opening the volume, including its encrypted position map), and suppose that this header is one slice large. What we can do is put the first header (for the first volume) at the beginning of the disk, and the others at random positions across the disk space, interleaved between the other data slices. If everything is encrypted, the adversary cannot tell whether a slice is a data slice or a header, and hence cannot tell exactly how many volumes there are, or even what MAXIMUM number of volumes there can be.

This is cool, it makes safewords impossible! No artificial limit on the maximum number of volumes. But there are challenges.

First of all, how do we "navigate" this chain of headers? We know where the first one is, but what about the others? The idea is to have every header pointing at the position of the next one through a dedicated field. But how about this field? We cannot encrypt it: We would need to encrypt it with the password of the CURRENT header, but then it would be useless for plausible deniability (the adversary will know the position of the next header). We could encrypt it with the password of the next header then. This works if we only have two volumes, but what if we have three instead, and we want to unlock the third one? There would be an unnavigable "gap" in the chain.

My solution is simple: this pointer field is simply left unencrypted, but ANY random string could be a valid pointer. In practice, the concrete idea is to have a random value in the header, say 128 bits, and use it as an input to a linear function which maps uniformly to all slices of the disk: the string "0x0..0" would be the first slice, while the string "0xF...F" would be the last slice, and anything in between would point to other slices linearly. Clearly, random values that are close to each other would point to the same slice, but this is OK. The observation now is the following: even without having any password, the adversary can navigate from the first header to the position of the second, to the third, and so on, but she will not know when to stop, because there will be no way of knowing when the "real" chain is over! The adversary would keep jumping back and forth on the disk like crazy, without getting any clue about the number of volumes!
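One possible way to write this linear map, as a Python sketch. The name `pointer_to_slice`, the constant `POINTER_BITS`, and the multiply-and-shift formula are my assumptions for illustration; nothing like this exists in Shufflecake today.

```python
POINTER_BITS = 128  # size of the random pointer field, as suggested above


def pointer_to_slice(pointer: int, num_slices: int) -> int:
    """Map a POINTER_BITS-bit value linearly onto slice indices 0..num_slices-1."""
    if not 0 <= pointer < (1 << POINTER_BITS):
        raise ValueError("pointer out of range")
    # Scale [0, 2^128) down to [0, num_slices) and round down.
    return (pointer * num_slices) >> POINTER_BITS
```

With this choice, the all-zero string maps to slice 0, the all-ones string maps to slice `num_slices - 1`, and nearby values collapse onto the same slice, as described in the text.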

Except... three problems.

The first problem is that there actually IS a way for the adversary to know that, starting from a certain point, surely the chain is finished, and she is now inspecting data slices rather than headers. This happens if there is a loop. No header can point to a previous one, so if this random value points "backwards", then we know that something is wrong. But notice that headers will actually be a negligible amount of the disk space, so the chance that a random value points to one of the existing headers is negligible. Sure, it will happen sooner or later along the chain, but we cannot know in advance when. This is sufficient to argue that in no way could a user use a safeword to "limit" the growth of this chain.
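The adversary's walk, including the loop check, could look roughly like the following Python sketch. The disk is modelled as a plain list holding the unencrypted pointer field of each slice (for a data slice, that is just whatever ciphertext bits sit at that offset); this model and all names are hypothetical.

```python
import secrets
from typing import List

POINTER_BITS = 128


def walk_chain(pointer_fields: List[int]) -> List[int]:
    """Follow pointers from slice 0 until a slice repeats; return the slices visited."""
    num_slices = len(pointer_fields)
    visited = set()
    order = []
    current = 0  # the first header sits at the beginning of the disk
    while current not in visited:
        visited.add(current)
        order.append(current)
        # Same linear map as in the text: scale the pointer onto slice indices.
        current = (pointer_fields[current] * num_slices) >> POINTER_BITS
    return order


# A toy "disk" of 4096 slices with uniformly random pointer fields.
disk = [secrets.randbits(POINTER_BITS) for _ in range(4096)]
chain = walk_chain(disk)
```

For purely random pointers, the walk typically closes on itself after a number of steps on the order of the square root of the number of slices (a birthday-style effect), so the loop does show up eventually, but its position does not depend on how many real volumes there are.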

The second problem is: what happens if, during use, modification of a data slice "breaks" the chain? Well, also not a big deal: Notice that Shufflecake would know exactly the position of all unlocked headers, and would treat those slices as permanently occupied, so no risk of overwriting one of those. The only possibility would be to overwrite a "bogus" header, thereby breaking the chain of fake headers. But for the adversary, without the right password, everything is a link of the chain, even the new (encrypted) data slice! So, all that changes is the chain, which (after that slice) might now point to completely new areas of the disk, but this doesn't break anything for the user.

But, wait, then could the adversary know how many "real" headers there are by looking at the state of the chain over time? Yes, this is possible: Every time the adversary sees a change of chain at link N, she knows that the number of volumes is AT MOST N-1, and every observation can shrink down this estimate. This is a problem, but it depends on how often the data slices change, and anyway we are now in the multi-snapshot regime. Things become quickly complicated here, but also solutions multiply. We can use re-randomization (since we use AES-CTR), or we could do some brute-forcing to make sure that the random pointer lands somewhere we want. In any case, the accuracy to which an adversary identifies the real number of headers depends on the number of snapshots she has, which cannot be too many because TRIM is not an ever-growing blockchain...

The last problem is: how about the slice maps? Those are usually larger than a single slice, they can't fit into a single header so defined. True, but nothing changes if the header is 2 slices large, or 3, 4, 5, etc. Just use the same scheme: every slice of a header points to the next one, and the last slice points to the first slice of the next header, and so on. This also has the advantage that we can use more or fewer slices for a header, depending on the size of the device. We can also embed as much volume metadata as we want.
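The multi-snapshot estimate can be sketched as follows in Python. As before, each snapshot is modelled as a list of per-slice pointer fields, and I assume that the pointer field of a real header never changes between snapshots; the function name and this model are mine, not part of any existing design.

```python
from typing import List, Optional

POINTER_BITS = 128


def first_changed_link(before: List[int], after: List[int]) -> Optional[int]:
    """Walk the chain of the older snapshot and return the 1-indexed link
    whose pointer field differs in the newer snapshot (None if no link changed)."""
    num_slices = len(before)
    seen = set()
    current, link = 0, 1
    while current not in seen:
        if before[current] != after[current]:
            return link
        seen.add(current)
        current = (before[current] * num_slices) >> POINTER_BITS
        link += 1
    return None


# Toy 4-slice disk whose chain visits slices 0 -> 2 -> 1 and then loops back to 0.
snapshot_1 = [2 << 126, 0, 1 << 126, 3 << 126]
snapshot_2 = list(snapshot_1)
snapshot_2[1] = 123456789  # slice 1 (third link of the chain) was overwritten

n = first_changed_link(snapshot_1, snapshot_2)
max_volumes = n - 1 if n is not None else None
```

Here the change is at link 3, so the adversary concludes there are at most 2 volumes; taking the minimum of this bound over many snapshot pairs is how the estimate shrinks over time.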

Cool stuff!

(Aug 17th, 2023) Back From Vegas, Black Hat, DEF CON, Shufflecake News, Etc...

Let's try to have a proper post for once after a long time. I'm back from Vegas, from my first Vegas. It was tiring, it was productive, it was exciting, but mostly it was good enough that I hope it won't be my last Vegas, because I really enjoyed it! Let's see what happened.

I arrived on Tuesday, Aug 8th in the early evening, after a long, boring and uneventful direct flight. The first impression of the city itself was as I expected it: fake, but in a kind of friendly way. Clearly, this only holds for The Strip, which is pretty much the only part I saw. Time was short and packed. I was there to attend Black Hat and DEF CON, my first time for both of them.

I met with other folks from Kudelski Security. The first thing I learned about Black Hat is that the conference itself is just half of the fun: in the evenings, every evening, people go to "parties", which are more or less exclusive networking events with cheap booze and finger food. So, as soon as I arrived at my hotel I barely had the time to change and go to the first evening party of the week! It was good: I quickly entered "undead mode" and managed to stand straight on my feet until around 10 PM, at which point the reanimation spell which was keeping me going faded, and I barely had the energy to crawl back to the hotel and crash. I still woke up at 5 AM and went to have "burger breakfast" at the casino downstairs with a colleague who was as jet-lagged as I was. Reading back what I just wrote, it sounds super weird but, hey, it's Vegas!

On Wednesday, Black Hat itself started. If I had to describe it with a single word, I would call it grandiose. I walked so much my feet still hurt: I am not used to walking a couple of miles just to get from the breakfast "room" (more "arena") to the talk room. I have to say, I didn't enjoy Black Hat too much. Too "corporate", talks were less interesting than I expected, and the sight of business people in the vendor booths trying to attract you and sign you up for their mailing list in exchange for cheap goodies made me feel very sad for them. The other parties I went to varied greatly in terms of quality and fun. I had good times with my colleagues, had my taste of American cuisine, but overall I would not define the first days at Black Hat as very interesting.

DEF CON, on the other hand, was a blast!