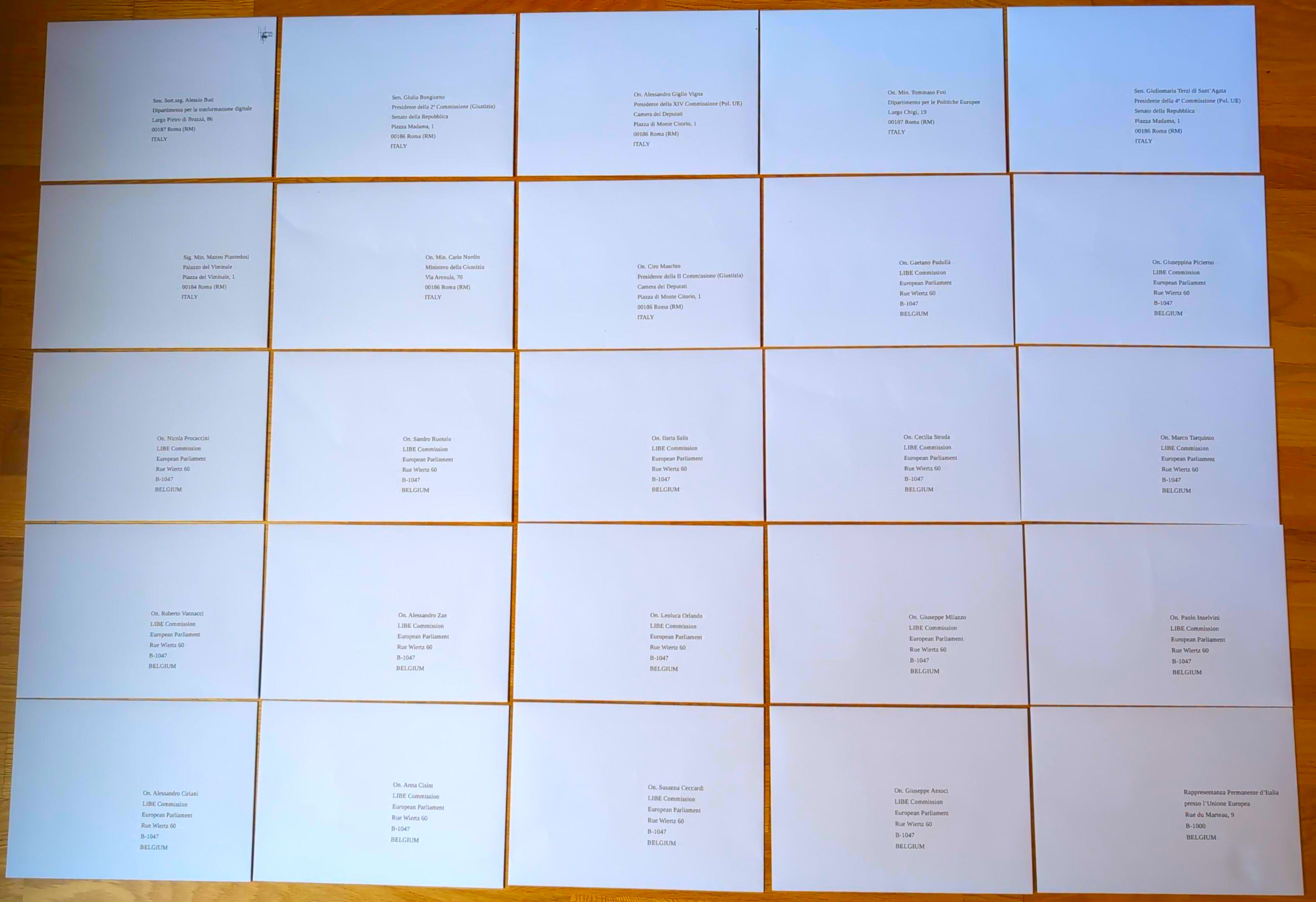

I'm not saying this will have any meaningful impact, but I don't want to talk the talk without walking the walk, so I decided to act. Today I sent a series of formal emails to many members of the Italian government and parliament, as well as to Italy's representatives at the EU, to express my concerns about the EU proposal for client-side CSAM scanning (colloquially known as "ChatControl").

I have already called for action on my Mastodon and LinkedIn profiles, and this post is my small contribution to the cause. I see no need to explain here why I believe the proposal is dangerous and short-sighted, as others have already done so better than I ever could (for example, Patrick Breyer). What I want to do here, instead, is twofold:

- Share templates of the emails, together with the addresses to send them to, in the hope that you, my fellow Italians, will want to contribute too. While Gemini helped me a bit (and, to be clear, all blog posts on this page are written by a human), collecting the addresses, double-checking the references, and fine-tuning the text still took me some time. May this save you some of yours.

- Report in future posts who replies to my emails and how. To protect their privacy, I will not quote the replies verbatim, except perhaps for short excerpts. I do, however, want to share which of the politicians I contacted take the time to respond to an ordinary citizen's plea, and what their positions on this important matter are.

So, stay tuned! In the meantime, here are the texts of the four emails I sent. Feel free to use them as templates to email your favourite politicians: I posted them here precisely for that reason, so there is no need to ask my permission!

Email 1: to the Italian Government

Subject: Opposition to the "ChatControl" proposal - File 2022/0155 (COD)

To: gabinetto.ministro@interno.it , protocollo.gabinetto@giustizia.it , info.affarieuropei@governo.it , segreteria.butti@governo.it

Body:

To the kind attention of the Minister of the Interior, Matteo Piantedosi

and, for information,

the Hon. Minister of Justice, Carlo Nordio

the Hon. Minister for European Affairs, Tommaso Foti

Senator Alessio Butti, Undersecretary for Technological Innovation

Dear Ministers, dear Senator and Undersecretary,

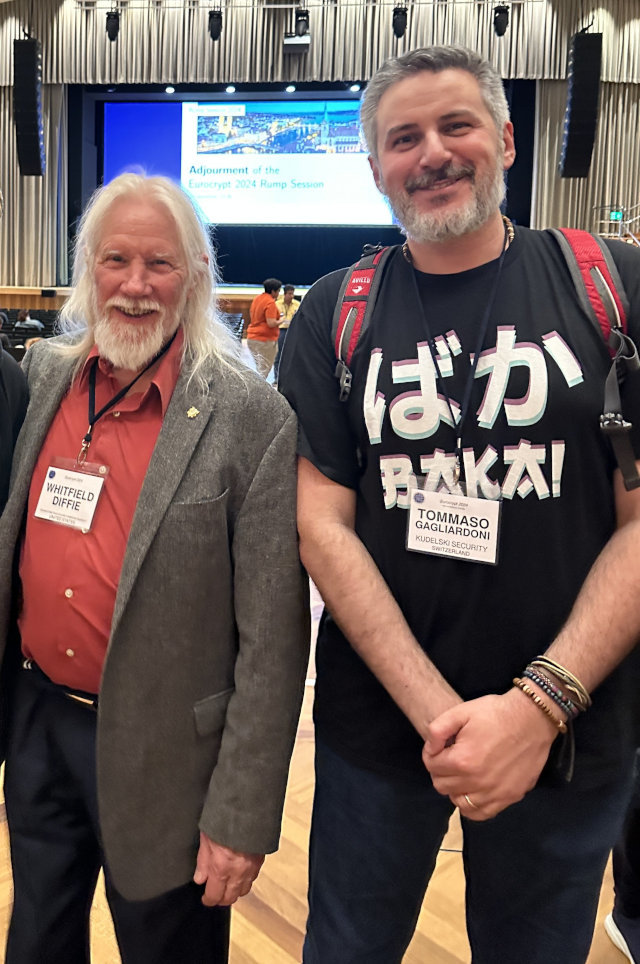

My name is Tommaso Gagliardoni. I am writing as an Italian citizen and as a professional in the field of cryptography and information security. Further information on my professional profile is available at the following addresses:

[link to my CV and my LinkedIn profile]

The purpose of this letter is to express my deep concern regarding Council of the European Union document 10131/25, part of legislative file 2022/0155 (COD) on the "Proposal for a Regulation laying down rules to prevent and combat child sexual abuse", also known as "ChatControl".

I understand that, within the Council, the Italian Government's preliminary position has been in favour of adopting this regulation. With the utmost respect for the institutions you represent, I wish to express my firm opposition to this measure. As a professional in the field, but also as a father and a European citizen, I believe it erodes the fundamental freedoms and dignity of everyone, in the name of the admittedly noble aim of protecting children.

The "ChatControl" proposal is widely criticised by the international expert community as technically ineffective for its stated aims and, at the same time, dangerous for collective security. None of the client-side scanning solutions currently envisaged is free from serious vulnerabilities.

Speaking as a technical expert, I can assure you that the potential for abuse of such a mass-surveillance technology is enormous. The question is not "if" it will be exploited for illicit ends, but only "when" it will be used for authoritarian purposes, or for espionage and sabotage operations by hostile actors, state and non-state alike.

For these reasons, I appeal to your sense of responsibility and kindly ask that the Italian Government reconsider its position ahead of the final vote and oppose the adoption of the proposal within the Council of the EU.

I urge you to listen to the hearings of technical experts and to safeguard the cybersecurity infrastructure and the privacy of citizens, which are a primary asset for the nation and for the Union as a whole.

Thank you for your attention. Yours sincerely,

Tommaso Gagliardoni

Email 2: to the Italian Parliament

Subject: Examination of the EU "ChatControl" proposal - File 2022/0155 (COD) - Request for hearings and an opinion against

To: maschio_c@camera.it , giglio_a@camera.it , giulia.bongiorno@senato.it , giulio.terzi@senato.it

Body:

To the kind attention of the Chairs of the Parliamentary Committees

Hon. Ciro Maschio, Chair of the 2nd Committee (Justice), Chamber of Deputies

Hon. Alessandro Giglio Vigna, Chair of the 14th Committee (European Union Policies), Chamber of Deputies

Sen. Giulia Bongiorno, Chair of the 2nd Committee (Justice), Senate of the Republic

Sen. Giuliomaria Terzi di Sant'Agata, Chair of the 4th Committee (European Union Policies), Senate of the Republic

Dear Chairs,

My name is Tommaso Gagliardoni. I am writing as an Italian citizen and as a professional in the field of cryptography and information security. Further information on my professional profile is available at the following addresses:

[link to my CV and my LinkedIn profile]

I am addressing you because of the essential role of guidance and oversight that the Committees you chair exercise over the Government's conduct on European legislation.

I wish to draw your attention to Council document 10131/25, part of legislative file 2022/0155 (COD), known as "ChatControl".

As you know, the proposal aims to introduce forms of mass, generalised surveillance of digital communications, including those protected by end-to-end encryption. I understand that the Italian Government's preliminary position within the Council of the EU has been in favour of adoption.

This stance is a cause of deep concern within the technical and scientific community. The "ChatControl" proposal is widely criticised by international experts as technically ineffective for its stated aims and, at the same time, dangerous for collective security. The proposed client-side scanning solutions would in effect create systemic "backdoors", exposing the communications of citizens, businesses and the Public Administration itself to incalculable risks of espionage and sabotage by hostile actors.

The potential for abuse of such a technology is enormous, and it would set an extremely serious precedent, in clear conflict with the principles of proportionality and necessity enshrined in the European Treaties and in our Constitution.

In view of your role, I respectfully ask you to:

1) Open a thorough inquiry within your Committees into the proposal, analysing its technical and legal shortcomings.

2) Promote a series of hearings with independent experts in cybersecurity, cryptography and technology law, in order to obtain a complete and unfiltered picture of the associated risks.

3) Adopt a resolution expressing an opinion against the current structure of the proposal, committing the Government to change its position within the Council of the European Union and to protect the fundamental rights and digital security of Italian citizens.

Trusting in your essential role as guarantors of democracy, yours sincerely,

Tommaso Gagliardoni

Email 3: to the Italian LIBE members at the European Parliament

Subject: "ChatControl" proposal (2022/0155 COD) - Appeal to defend privacy and digital security

To: alessandro.zan@europarl.europa.eu , giuseppe.antoci@europarl.europa.eu , susanna.ceccardi@europarl.europa.eu , alessandro.ciriani@europarl.europa.eu , paolo.inselvini@europarl.europa.eu , ilaria.salis@europarl.europa.eu , cecilia.strada@europarl.europa.eu , anna.cisint@europarl.europa.eu , giuseppe.milazzo@europarl.europa.eu , leoluca.orlando@europarl.europa.eu , gaetano.pedulla@europarl.europa.eu , nicola.procaccini@europarl.europa.eu , giuseppina.picierno@europarl.europa.eu , sandro.ruotolo@europarl.europa.eu , marco.tarquinio@europarl.europa.eu , roberto.vannacci@europarl.europa.eu

Body:

Honourable Members of the European Parliament,

My name is Tommaso Gagliardoni. I am writing as an Italian and European citizen, and as a professional with experience in the field of cryptography and information security. Further information on my professional profile is available at the following addresses:

[link to my CV and my LinkedIn profile]

I am addressing you in your crucial role as members of the Committee on Civil Liberties, Justice and Home Affairs (LIBE), the parliamentary body with primary responsibility for this sensitive dossier.

I would like to express my deep concern about the compromise proposal on the "ChatControl" regulation currently under discussion in the Council. As you well know, the text threatens to impose generalised scanning obligations ("client-side scanning") even on communications protected by end-to-end encryption.

If adopted, such a measure would be an unprecedented attack on the fundamental rights to privacy and to the protection of personal data, enshrined in Articles 7 and 8 of the Charter of Fundamental Rights of the European Union. It would also create an extremely dangerous structural vulnerability in the European digital ecosystem, exposing citizens, businesses and institutions to risks of surveillance and cyberattacks on a scale never seen before.

The international academic and technical community is unanimous in condemning these proposals as technically flawed and inherently insecure. There is no "middle ground" that weakens encryption only for the "bad guys": any backdoor created by law will inevitably become a target for authoritarian regimes and criminal organisations.

The European Parliament has historically distinguished itself as the staunchest defender of citizens' digital rights. At this decisive moment, your voice and your vote are crucial.

I therefore urge you to:

1) Maintain a firm line of opposition to any text that includes forms of mass, indiscriminate surveillance of private communications.

2) Defend the integrity of end-to-end encryption without compromise, as an essential tool for security, freedom of expression and democracy in the digital age.

3) Reject the proposal outright, should it reach you without the necessary safeguards for fundamental rights.

Trusting in your commitment to defending the founding values of the European Union, yours sincerely,

Tommaso Gagliardoni

Email 4: to the Permanent Representation of Italy to the EU

Subject: Technical remarks on the "ChatControl" proposal - Legislative file 2022/0155 (COD)

To: rpue.coord@esteri.it

Body:

To the kind attention of the Permanent Representation of Italy to the European Union,

My name is Tommaso Gagliardoni. I am writing as an Italian and European citizen and as a professional in the field of cryptography and information security. Further information on my professional profile is available at the following addresses:

[link to my CV and my LinkedIn profile]

I am writing to submit for your attention some remarks on technical and national-security aspects of legislative file 2022/0155 (COD), known as "ChatControl", and in particular of the compromise proposal currently under discussion in the Council.

Aware of your crucial role in negotiating legislative dossiers within the Council's working parties, I wish to highlight the serious implications that adopting this legislation would have for national and European strategic security.

The envisaged technological solutions, based on client-side scanning, by their very nature require a fundamental weakening of the guarantees offered by end-to-end encryption. This is not only a threat to individual privacy: it creates a systemic vulnerability, exploitable at scale, that puts at risk:

- The security of government and institutional communications.

- The protection of the intellectual property and trade secrets of Italian and European companies.

- The integrity of critical infrastructure that relies on secure communications.

Introducing a "backdoor" by law, however well-intentioned, amounts to creating a cyber weapon that could be (and in all likelihood would be) turned against national interests by hostile state actors or by technologically sophisticated criminal organisations. The international community of security experts is unanimous in considering this approach technically unsustainable and dangerous.

I therefore ask that, in carrying out its negotiating mandate, the Italian delegation:

1) Fully assess the strategic risk to national security arising from a generalised weakening of encryption.

2) Firmly oppose any text that mandates mass scanning of private communications, as this is technically incompatible with effective protection of digital infrastructure.

3) Forward these technical remarks to the relevant ministerial offices in Rome, so that the national political position is informed by a correct and complete assessment of the technological risks.

Confident that safeguarding national digital security is a priority for our diplomacy, yours sincerely,

Tommaso Gagliardoni